This article was originally published in The Interline’s AI Report 2024. To read other opinion pieces, exclusive editorials, and detailed profiles and interviews with key vendors, download the full AI Report 2024 completely free of charge and ungated.

Key Takeaways:

- AI is significantly transforming fashion design, presentation, production, and purchasing, enabling brands to improve or potentially sidestep traditional 3D and digital product creation workflows.

- While open AI models provide accessibility and creativity by leveraging vast public datasets, closed AI models offer greater control, brand consistency, and data privacy by using proprietary data.

- The apparel and footwear industries are likely to develop more specialised AI models tailored to specific garment types and brand identities, balancing the benefits of general-purpose and expert AI models.

That title might feel like a blunt statement. But the fact is that Artificial Intelligence is already changing the way fashion is designed, presented, produced and purchased – in both obvious and hidden ways. As the common tech refrain goes, this is a super fast-moving train – you either jump on board or get run over by it. And there is very little time for companies to adjust their strategies in either direction. What might this mean for one of fashion’s biggest centres of digital transformation (3D and digital product creation)? And what indicators can that give us for how significant the impact of AI could be across the full scope of existing and new digital transformation initiatives?

How digital transformation differs from digital product creation

Over the last twenty years, our industry has witnessed a complete paradigm shift away from predominantly physical sampling of garments, footwear, accessories, jewelry, watches, and other categories. For many brands, the majority of samples are either already digital, or are targeted to become digital as part of multi-year strategic transformation projects.

And today, associated processes like market research, trend analysis, merchandising, buying, design, development, and more recently upstream manufacturing are shifting to a new digital best-practice model. Physical sampling is becoming a second-best approach, driven by the need for speed, sustainability, and cost. If you want to be quick to market, with a reduced footprint and the right margins, the more you can do digitally – using 3D design, simulation, and visualisation tools – the better.

For most of the fashion workforce, that shift from physical to digital still feels “new” even if the core principles behind 3D design have been consistent for decades. Many brands are, right now, actively scaling their 3D departments in design, technical design, engineering, and downstream content creation, and 3D artists are amongst fashion’s most sought-after talent. So the assumption would be that this way of working, feeling close to the cutting edge of digital transformation, is as safe as any workflow can be.

Which is why it’s been so interesting to see that paradigm shift being dwarfed by the emergence of Generative AI, which is now enabling fashion brands to not just improve on some of the steps of a 3D / DPC workflow, but to potentially sidestep them entirely.

From automating the creation of styling options through iterative prompts, to virtualising and simulating costing, environmental impact, and operational best practices, bringing together AI and DPC tools can help to realise the original (and largely untested) vision for making the full scope of creative and commercial decisions based on a digital representation of a product, material, or method.

But in the last few months we’ve also witnessed even more eye-watering advancements. AI and Gen-AI models are becoming adept at analysing vast amounts of real-time data, enabling brands to predict upcoming trends with greater accuracy, identify cultural shifts and consumer preferences, and even support hyper-personalisation, delivering customised product styles not just in sketch or technical specification form, but as potentially eCommerce-ready images and video.

Other interesting use cases include Gen-AI storyboarding, which analyses a design brief and uses a brand’s own design assets to help create new style content, including backgrounds and layouts. Combined, these help design and marketing teams to visualise the story, while producing new options in a matter of seconds.

Script-to-video is a prime example of how a Gen-AI development can transform the workflows of design and marketing teams. Very soon, we can expect to see CMOs introducing editors into their teams, who will use the design brief to create marketing scripts that are then used to develop videos for downstream use cases. Of course, there are challenges with Gen-AI video right now, especially linked to the quality (resolution and accuracy remain problematic) and detail that we expect from professional production teams, but we need only look back a couple of years at the images AI models were capable of creating – surreal smudges mainly – to see how far the technology has progressed in a very short span of time. It would be naive to assume it won’t progress any further, even if effort is translating into diminishing returns in some cases.

And when those improvements happen in modalities like product images and videos, where might that leave 3D and DPC departments?

To get a better idea of how tangible this impact could become, it’s helpful to remind ourselves not just what AI can potentially do, but how the method of training, deploying, and using AI will influence its outcomes. Because, to stretch our metaphor a bit, while the train as a whole is definitely barrelling towards the industry at high speed, we do still have the opportunity to determine which carriages arrive where – and how.

What’s in a model? Open, closed, LLM and LMM

The most prominent frontier in the AI race is that of Large Language Models (LLMs) and Large Multimodal Models (LMMs): both closed and open-source versions are readily available at a significantly lower project cost than developing and maintaining a private model.

This is perhaps the biggest shift in how AI is sold, bought, and used that has occurred in the last 18 months. Where once we would need to design, develop, train, test, finetune, deploy, refine and maintain dedicated models for very narrow, specific tasks, there are now multiple different routes to rolling out and scaling a new AI initiative that make use of general-purpose pretrained models and the largest infrastructure and cloud architecture providers on the planet. This can be crucial especially for smaller brands or those starting out with a Gen-AI POC (proof of concept) project.

Another consideration will be access to voluminous and diverse (in a literal sense) data. Open and proprietary LMMs are trained on massive amounts of publicly available data, including text, sound, videos, fashion trends, material science, the principles of carbon impact measurements and much more. This spread can be advantageous for brands both large and small that want to trial AI and Gen-AI projects without acquiring their own datasets, although it can also backfire in the sense that those brands have no control over what data was used in initial training – and while fine-tuning can adjust the output of a model, it remains a concern that the largest public models are trained on potentially copyrighted data and on potentially biased and exclusionary datasets.

On the positive side, though, the broader data exposure of shared models can generate innovative, unexpected designs, spark creative inspiration and help push boundaries beyond the brand’s existing data or style types. Potential examples could include trend data driving new text prompts that in turn use Gen-AI to create new concepts, material suggestions, style details, sustainability options, and potentially the optimal manufacturing sources.

Open models can also potentially capture and reflect current fashion trends more quickly than internal data alone, which might take longer to analyse and then make available to merchandisers, buyers and design teams.

And by understanding how open models perform, and fine-tuning their output, brands can identify the specific areas where an LLM can be most beneficial and potentially inform the development of a customised model in the future.

So, what are the downsides of using open-source and general commercial models? There is what we call a risk of noise: retail brands that share the same pool of data could end up with similar product concepts. In the worst case, you could inadvertently use another brand’s design I.P. (intellectual property), which can lead to infringement lawsuits that are costly and time-consuming to resolve.

Molding your own model

Let’s examine the benefits of taking a closed, private LMM approach. One benefit is data privacy and control: a private Gen-AI LMM allows brands to train the model on their own proprietary data, locally on their own network. That data can include, for example, trend intelligence, design sketches, photographs, product descriptions and customer feedback. This level of control over the training data can help to mitigate the risk of unintentionally copying other brands’ I.P. And not only can that data be used for Gen-AI use cases, but brands could also look at using the same data to support upstream development and manufacturing, including scientific environmental and sustainability measurements.

Another benefit is brand consistency. By training a private Gen-AI LMM, brands can ensure that the model generates outputs that are consistent with their brand identity and aesthetic DNA. This will be important not only for maintaining brand consistency and protecting brand value, but also for ensuring that the model can be consistently updated based on your own design strategy. With a private Gen-AI LMM, brands could also have much greater control over the outputs of the model. This means that they can prevent the model from generating outputs that resemble those of an open, shared LLM, or that contain offensive imagery or discriminatory or otherwise harmful concepts that may affect their brand reputation.

Whatever approach you take, however, the route to success will lie in striking a balance between leveraging Gen-AI models and maintaining human creativity and oversight. Like a lot of professionals and analysts, we predict that AI and Gen-AI models will be used more as co-pilots or assistants to designers and developers. To obtain the best possible results from AI and its subcomponents, it will be essential that humans are clearly in the driving seat!

Taking AI Image Generation for a Test Drive

We have all been using image verification on multiple platforms on the internet to verify we are indeed human for years now. Along with other manual image tagging and categorisation efforts, the results of that activity have contributed indirectly to this new world of AI language models with image generation, by helping to train and test the ability of computer vision models to recognise the constituent parts of a picture.

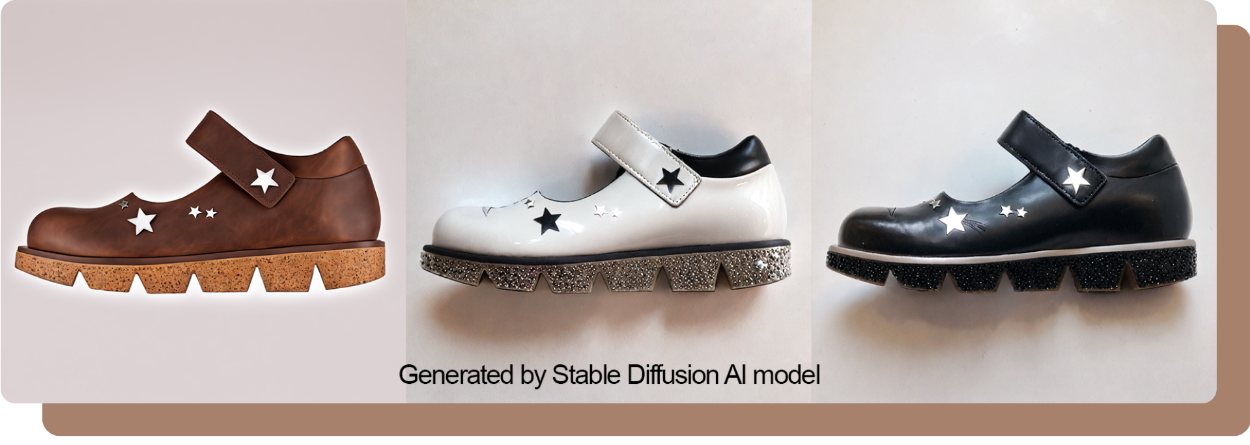

It was just 18 months ago that AI image generation started taking hold in the public space, as new open-source platforms like Stable Diffusion, and closed-source but API-accessible models like Midjourney and DALL-E, began to allow anyone to generate unique and controlled images from simple (or increasingly ornate) text prompts.

During that initial rush, it appeared as though the fields of art and design would be instantly disrupted, and while some measure of that vision has come to life, it’s still a little unclear how those off-the-shelf models are impacting the specific disciplines of apparel and footwear design.

So as part of the process of researching this article, we decided to test their performance as potential design assistants and copilots for fashion and footwear designers, to understand not just how capable these models are in those narrow areas, but also how ready their outputs are to be used in processes further down the line – all the way to production and the supply of fashion products.

Very quickly it became apparent that the Large Multimodal Models used for AI image generation available to the public were impressive in their image quality and potential, but it was difficult to control the details and style, and receive enough consistency from them. This is not a surprise when we consider the provenance of their training data, as we outlined earlier – these models are trained on vast and extremely varied sources such as the internet and image banks.

So, all the images from brands and the huge number of varying designs out in the public sphere, especially from the more popular brands, were influencing the outputs we received, regardless of how well we constructed the text prompts, and as we have touched on previously, raising the risk of IP infringement.

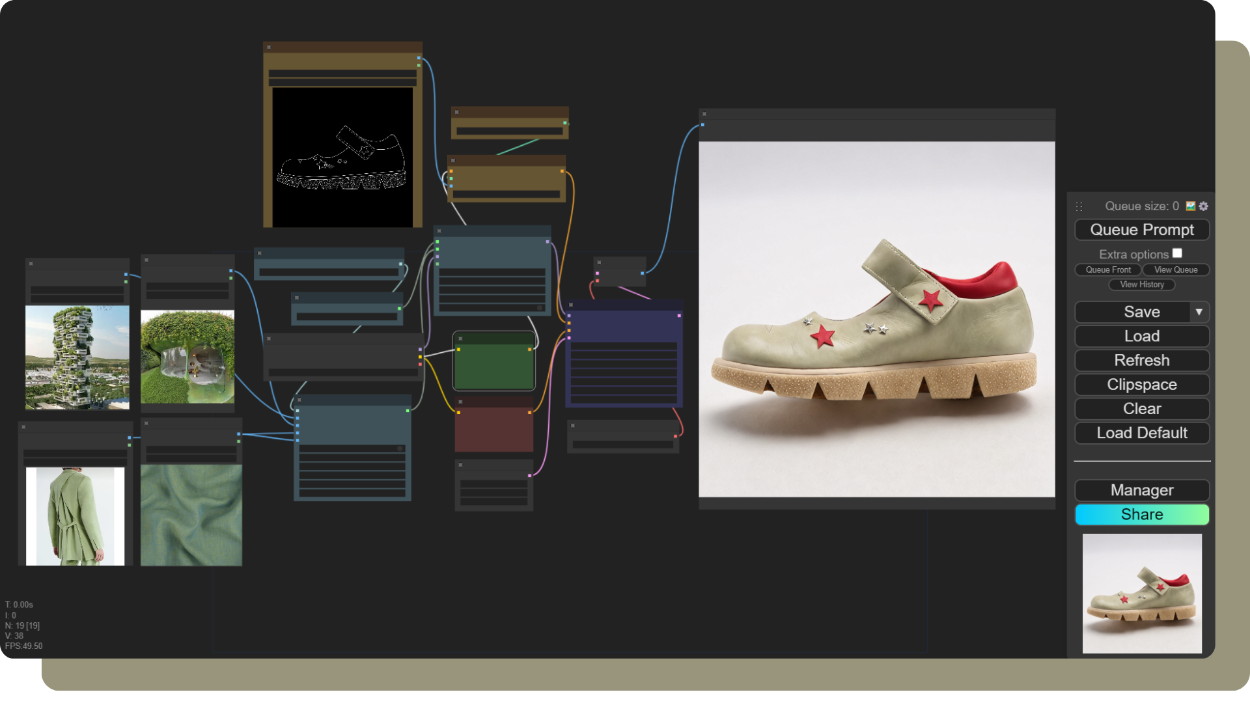

This led us to look at training our own models connected to the existing LMMs. By feeding a collection of our own brand images and text-prompts, we were able to constrain the influence from the outside world to a certain extent, but still received ‘noise’ from the public space via the larger LMMs our fine-tuned models were based on.

The results we received were already viable as a design assistant for designers in the early ideation stage, allowing designers to brainstorm ideas extremely quickly, without having to worry that their initial designs were already infringing on other brands’ IP. But the idea of taking any of these designs further forward was problematic, even if the seed of the idea was sound. So, once a designer wishes to develop more finalised designs with specific materials and design details to present to their clients and manufacturers, our finding was that the current open-source models are not yet ready to deliver as they are.

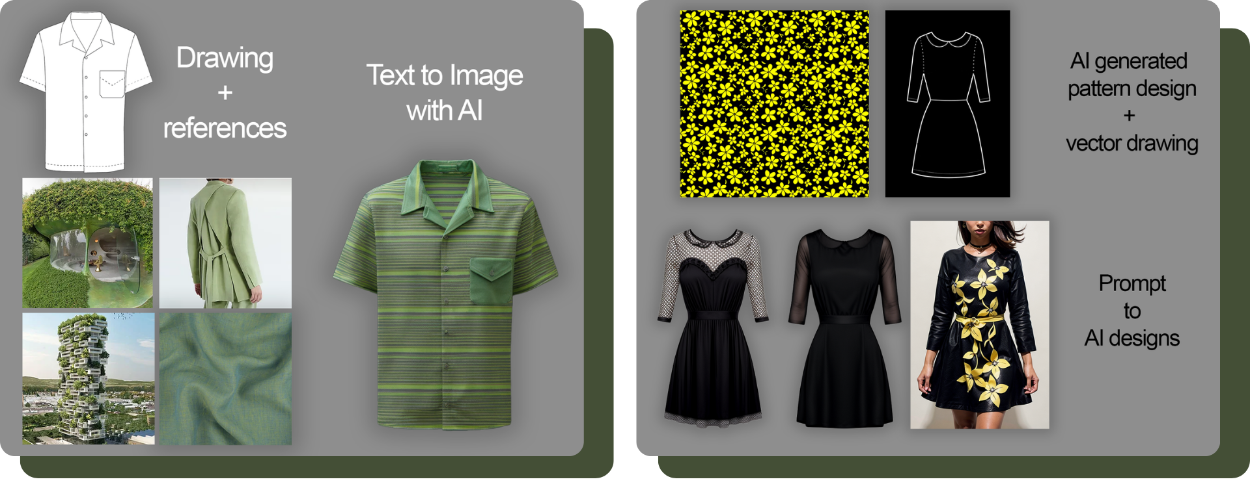

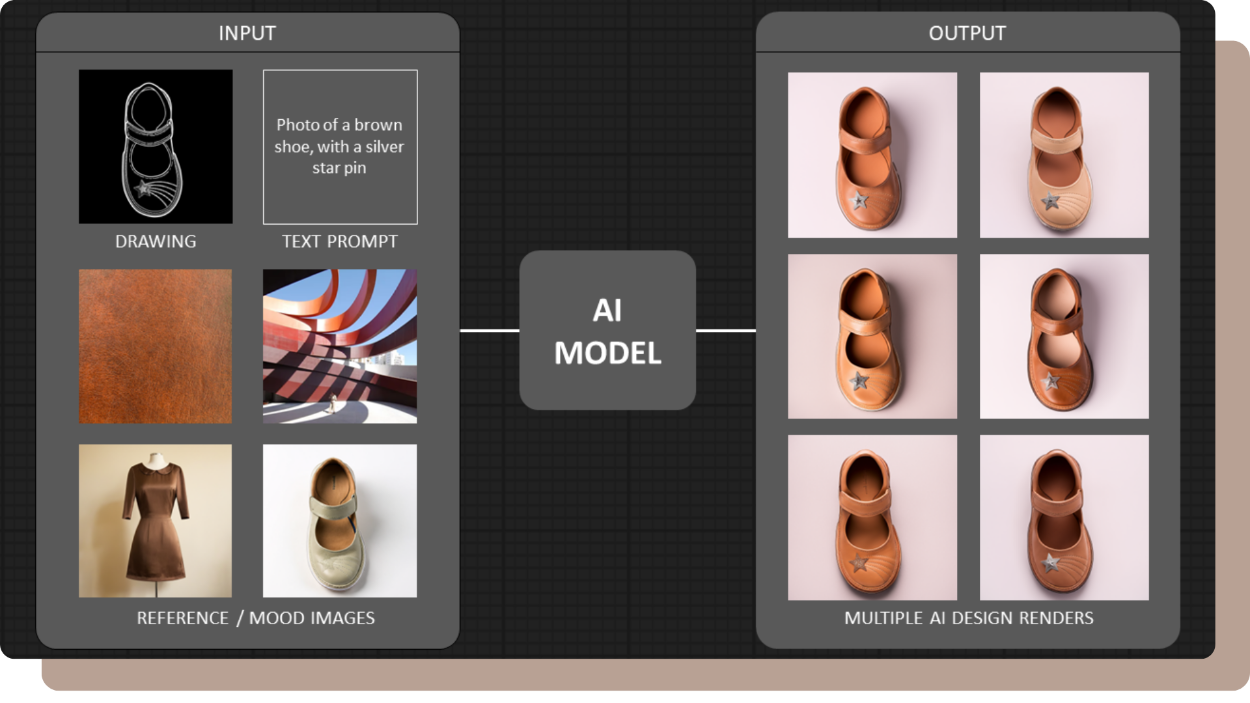

So, by feeding drawings, reference/mood images, and precise contextual text-prompts, through an LMM coupled with one’s own trained models, we explored how brands could speed up the early design process without those concerns. Within minutes a designer can have tens of design ideas rendered in photographic quality images. These can then be fine-tuned, or used for further design work on other platforms.

However, once a designer wishes to develop more finalised designs with specific materials and detailed styling to present to their clients and manufacturers, the current open-source models are not able to deliver: they rely on general data, whereas this stage requires a brand’s own primary datasets.

The solution requires the development of brand- and product-specific SMMs, using what we call the design DNA of a brand. And this is also true of AI in general. As popular as general-purpose models have become for – as the name suggests – general purposes, as we move forward, we are starting to see more ‘expert AI models’ being developed for specific domains.

We fully expect the apparel and footwear industries to follow suit here. AI models for certain garment types will not be the same as expert AI models for footwear. The language needed will be different, as will the materials used and the visual elements. Additionally, there will be differences from brand to brand. Which is why brands may need to invest in proprietary models to maintain integrity of their brand identities from design onward.

To help make a decision of that magnitude, though, it will be important to weigh up the potential on both sides.

Model types – LMM pros and cons

Pros of using open LMMs in design, development, and marketing of fashion:

Open LMMs are trained on vast datasets of fashion images, trends, and historical data, which they can draw on immediately. This allows them to generate innovative design options, patterns, and colour combinations that might not be readily conceived by human designers, at least not at the speed required in a fast-moving business, thus enhancing creativity and innovation.

Open LMMs can provide accessible tools for aspiring SME brands, designers, or individuals with limited resources, allowing them to participate in the creative process without needing specialised software or expertise.

Using LMMs can also automate various tasks in the design process, such as generating variations of existing designs and materials, creating design briefs and mood boards, and analysing customer preferences, leading to faster and more efficient development cycles.

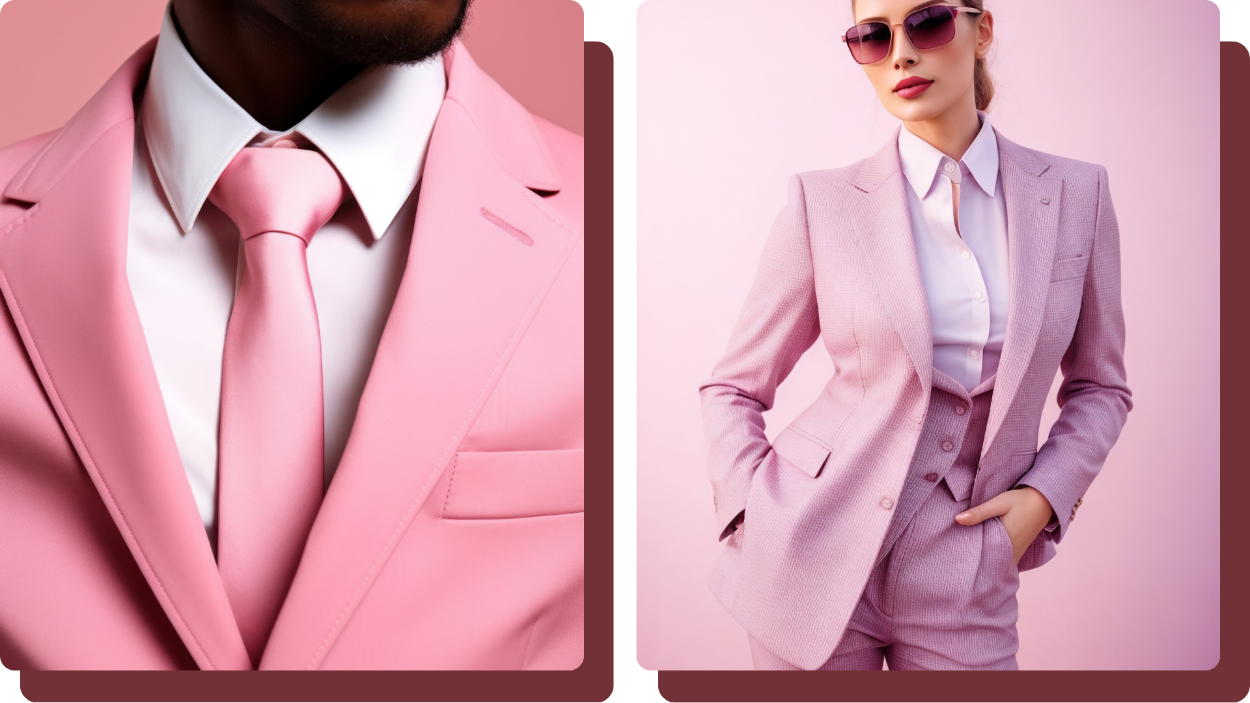

These models can accelerate and empower new ways for marketing departments to communicate their brand and products to their customers. The current AI image generation tools already allow marketers to produce quality imagery that is equal to that produced at a photo studio. With careful use of these tools they can have virtual models wearing or advertising their products. And with available LLMs like ChatGPT, they can combine imagery with text in line with their brand messaging.

Pros of using closed LMMs in design, development, and marketing of fashion:

LMMs have the potential to create extremely fast renders of new concept designs according to the brand’s style or DNA. All of a brand’s historical styles, sketches, imagery, accessories, materials, etc. can be used as local data that a closed LMM can access. No other AI model will have access to this data. All prompts and reference images will result in unique imagery that reflects the brand’s identity.

LMMs can personalise the design process by analysing individual user preferences, detailed body and foot measurements, and even style and fit preferences, leading to the creation of unique and tailored garments.

LMMs can be used to analyse and optimise material types, identify eco-friendly alternatives and sustainable, science-based processing, and suggest ways to minimise waste in the production process, contributing to a more sustainable fashion industry.

Since a closed LMM can have access to all local data, such as pricing from different suppliers, if built correctly it could provide accurate estimates on the cost per design in different territories, as well as a sustainability report as mentioned.

Since closed LMMs work only on brand-specific data, marketing departments can be confident in generating imagery that is more accurate to the products they are selling, or will sell. Additionally, brands can create brand-owned virtual models or actors that become recognisable, to present their products.

Trends come and go, as do personalised preferences of customers. As time passes the local closed LMMs can be regularly updated to include new data to keep supporting the brand’s departments.
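As a purely illustrative sketch of the costing idea mentioned above, the snippet below shows how supplier pricing held as local data could be rolled up into a per-design cost estimate by territory. Every name and figure here (the `SupplierQuote` fields, the supplier names, prices, and duty rates) is a hypothetical placeholder rather than real data or any vendor’s API, and applying duty to the whole subtotal is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SupplierQuote:
    """One supplier's pricing for a territory (all values hypothetical)."""
    supplier: str
    territory: str
    material_cost_per_m: float   # cost of one metre of material
    labour_cost_per_unit: float  # cut-and-sew cost for one unit
    duty_rate: float             # import duty as a fraction, e.g. 0.10


def landed_cost(quote: SupplierQuote, metres_per_unit: float) -> float:
    """Material plus labour, with duty applied to the subtotal (assumption)."""
    subtotal = (quote.material_cost_per_m * metres_per_unit
                + quote.labour_cost_per_unit)
    return round(subtotal * (1 + quote.duty_rate), 2)


def cheapest_by_territory(quotes, metres_per_unit):
    """Return {territory: (supplier, cost)}, keeping the lowest cost found."""
    best = {}
    for q in quotes:
        cost = landed_cost(q, metres_per_unit)
        if q.territory not in best or cost < best[q.territory][1]:
            best[q.territory] = (q.supplier, cost)
    return best


# Hypothetical quotes for a design that uses 2 metres of material per unit.
quotes = [
    SupplierQuote("Supplier A", "EU", 4.0, 6.0, 0.10),
    SupplierQuote("Supplier B", "EU", 3.5, 7.5, 0.10),
    SupplierQuote("Supplier C", "US", 5.0, 5.0, 0.20),
]
estimates = cheapest_by_territory(quotes, metres_per_unit=2.0)
```

In practice the value of a closed model would lie in keeping such figures current and connected to each design it generates; the arithmetic itself is the simple part.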

Cons of using open source LMMs for developing fashion:

Despite some of these industry-agnostic (and even industry-specific) benefits, open source and cloud-hosted proprietary LMMs currently have a limited understanding of fashion trends and nuances. While they access vast data sets, and can work from historical trends, they might not possess the same nuanced understanding of cultural context, emotional aspects, and the “intangible” aspects of fashion that human designers bring to the business. With closed LMMs, brands and designers can train models on these nuances, and direct them to achieve the aesthetic results they wish for.

There are also the ethical considerations to take into account. For example, the use of LMMs in fashion raises ethical concerns regarding potential biases in the training data, leading to discriminatory outputs or perpetuating harmful stereotypes.

Over-reliance on these LMM-generated ideas might also stifle genuine creativity that individual human designers bring, and lead to homogenisation in fashion design with less originality.

With AI in general, there is the fear of job displacement. Increased automation in the design processes through LMMs could lead to job losses in the fashion industry, particularly for roles involved in repetitive tasks. However, as we have already stated several times, we strongly believe that the ideal use of Gen-AI is with humans firmly in the driving seat, continuously teaching models to deliver on the current strategic design intent.

LMMs, both open source and closed models, are still under development, and their capabilities are indeed limited in terms of generating high-quality, detailed, and trusted designs that translate into actual production-ready garments and shoes. Human influence, editing and final decision making will be needed for the foreseeable future.

Shifting Paradigms, and worries of 3D-DPC Professionals

A shift has already begun from 3D-DPC visualisation to a dynamic, AI-driven conceptual design ecosystem, potentially creating a leapfrog opportunity for design and marketing teams.

Think about it this way: is the shortest route from an idea to a realistic-looking product visualisation (based on which other professionals can make confident creative and commercial decisions) to build 3D geometry, texture it with a scanned or procedurally generated material, simulate the drape on a 3D avatar, and then pose and render out an image? Or is it to simply prompt the attributes you want and then receive a generated image or video in a matter of minutes?

Imagine a design brief in the future being used as a script to help produce a video that could be used for internal and external marketing, and even for designers and developers to better visualise their concepts.

We can foresee that in the future image and video file formats could include detailed metadata on every aspect of the visual, including elements such as textures, camera info, lighting, and objects and their form in an environment, which everyone from designers to marketers will be able to interact with through prompting. Traditional 2D images or video will then become 3D in nature, from which images, video and even 3D models with textures can be generated for our different needs.

Based on the promotional materials shown, these newer text to video – and even text to 3D models – can also generate subjects with correct perspective, form, and consistency, making us ask the question: what now for 3D and DPC?

It takes many hours and considerable skill for humans to create 3D models and render them for design, production and marketing purposes. For Gen-AI models it takes just a few words and minutes to render out photorealistic images. This new reality carries huge opportunities for brands, and empowers creatives like never before – as well as creating difficult questions where resourcing and cost are concerned.

Imagine brands owning their own brand- and product-specific LMMs, where the Gen-AI understands the elements of the design DNA, from trims and accessories to styling and colours, coupled with these exciting text-to-video models – and soon enough the AI could be a fully-fledged assistant for designers and marketers, working at speeds that no human with a 3D-DPC license or design studio can compete with. All it will need is clear direction and foresight to provide results that would previously have taken days or weeks to realise.

There will, no doubt, be an evolution of 3D-DPC together with Gen-AI to develop the new workflows – indeed we are already seeing DPC technology vendors promoting workflows that begin with AI and then enter the 2D and 3D realm, where they can inform manufacturing with accurate imagery for production. But the driving engine of the product creation workflow of the future is likely to be Gen-AI image generation.

Unlike when 3D-DPC entered the industry, the brands now have the opportunity to direct this evolution. By investing in training, and building in-house tools, brands will gain the most efficiencies and build the correct workflows, utilising their resources effectively.

Future off-the-shelf design tools based on 3D-DPC will not offer such benefits, as they will most probably be based on open-source models and won’t be tailored to brand-specific data. AI development is moving too fast for them to cater for the number of brands – each with its own nuances and design DNA, and each with its own market position to occupy. Which is why we believe brands need to look at how they take ownership of their workflows and tool building.

The industry has already connected design with materials libraries and sustainability strategies. This is a process that consumes time and many work hours, even with the help of other platforms such as PLM and ERP. The brands that invest in their own Gen-AI models, with their own closed datasets, will be able to design with their sustainability goals and materials libraries taken into consideration automatically, as the model will be able to access that data in real time as it renders out design and marketing imagery.

Beyond Design

AI image generation will alter the structure and requirements not only of design departments, but of marketing departments as well. Even more creativity will be needed to direct the AI to create the most effective messaging both textually and visually – leading to an exciting opportunity for individuals interested in sales and marketing for fashion and footwear brands, as the skill set they need has just expanded.

As LMMs develop further, marketing departments will be able to rely more on visuals generated by AI, as the models will deliver better accuracy and specificity. Response time and flexibility will greatly improve for those departments that make full use of AI in their toolkit.

We recently witnessed a huge leap forward in text-to-video with OpenAI’s Sora and Alibaba’s EMO. In basic terms, the AI is now able to combine its understanding of language (currently English, but very soon all languages) and new knowledge of imagery, form, and motion to generate videos and animations in high quality.

Brands will need to redefine the skills and qualities needed in their sales and marketing departments. AI image, video and even 3D model generation will be so fast that brands will need to staff their marketing teams in-house with people who have the traditional skills found in design and film studios, and who know their brand identities, to make those quick decisions and direct the AI for the best results.

In the online world we already live in, and armed with these new tools and skill sets, sales and marketing will be able to showcase new designs and offer customers choices before proceeding towards manufacture more effectively than current methods.

We can imagine a design going from concept, to a video of that product, to being viewed on social media, with the positive or negative response informing a brand’s decision on whether to proceed to manufacture, all within a day, if not hours.

Which means the departments from design, to product development, to sales and marketing, will need to be communicating and in tune with the AI assisting them at speed.

The pencil revolution

Back in the early 1980s, before companies like Adobe were incorporated, we witnessed what we called the pencil revolution. Up until this time designers had drawn their garment and footwear sketches by hand, or hand-painted material prints, stripes, etc. Over the next few years, we taught designers how to use vector sketch tools like Micrografx Designer and CorelDRAW. Today Adobe has become the predominant choice for designers in the apparel and footwear industry, and is also leading the charge in Gen-AI.

Time to embrace change!

The fashion industry must continue to encourage a shift in mindsets, positioning Gen-AI not as a threat but as an opportunity that can help the industry to revolutionise and elevate traditional roles. We need to think of Gen-AI as our personal assistant, or co-pilot.

It is true that Artificial Intelligence is a disruptive technology, and by definition will replace existing processes and jobs, as previous disruptive technologies have. But unlike other historical changes, Gen-AI and AI will empower brands and designers, from small to large, as the cost of design up to manufacture will reduce dramatically, releasing resources to develop and deliver more variety, and reduce inherent waste in our current processes.

The critical role of human model ownership

We have already emphasised the symbiotic relationship between Gen-AI and human creativity, underlining the importance of human input in maintaining authenticity and aesthetic standards. We have also highlighted the unprecedented opportunities for professionals and businesses willing to embrace Gen-AI in their 3D-DPC modeling workflows.

Businesses should be encouraged to think strategically about integrating Gen-AI into their complex workflows, using AI & Gen-AI to deliver innovation that can lead to competitive advantages and new industry standards. Beyond your own businesses it will be important for you to collaborate and adapt in the face of evolving technologies, fostering an industry-wide culture of collaboration and innovation. We are shifting quickly from closed platforms to open-ecosystems that, when combined, will deliver exciting results.

To deliver in this direction, we will need to re-educate, train and upskill the workforce in AI and Gen-AI-related skills, empowering professionals like designers and data scientists to work together and navigate the evolving landscape with confidence. Our marketing teams will need to learn how to direct and produce new video content. Let’s consider the evolving opportunities for collaboration, partnership and learning within the Gen-AI ecosystem community, fostering an environment where insights and experiences are shared to collectively adapt to Gen-AI-driven standards.

In conclusion

Whilst the AI hype continues, businesses, particularly brand owners and decision-makers, are rightly asking themselves how to turn AI to their advantage – and how to anchor it into their overriding strategic objectives. But it’s important to remember that the current applications of generative AI are also just the tip of the iceberg: from multi-model databases and LMMs to self-improving agents linked to connected wearables and decentralised applications (dapps), the fashion ecosystem is in rapid change-mode at both the strategic and tactical levels.

As we see it, embracing AI is not just an option but a strategic necessity – a foundational piece of building a roadmap towards a future where innovation, creativity and efficiency can coexist.