Key Takeaways:

- Chinese AI startup DeepSeek has launched its free, open-source R1 model. This reportedly took a fraction of the cost and computing power to train compared to models from existing AI giants like OpenAI, Google, Anthropic, and Mistral, and DeepSeek also costs considerably less at the point of inference. The news wiped a massive amount of value off the shares of key tech companies – especially NVIDIA.

- While a storm is brewing around ownership, data protection, national security and a lot more, the biggest takeaway is that brands and retailers now have a much better shot at hosting their own models instead of relying on the aforementioned companies – theoretically allowing them to keep control of data governance.

- Robotics startups working on foundational models are still attracting substantial investment, and accelerated progress that could benefit fashion’s supply chain might be seen sooner than expected.

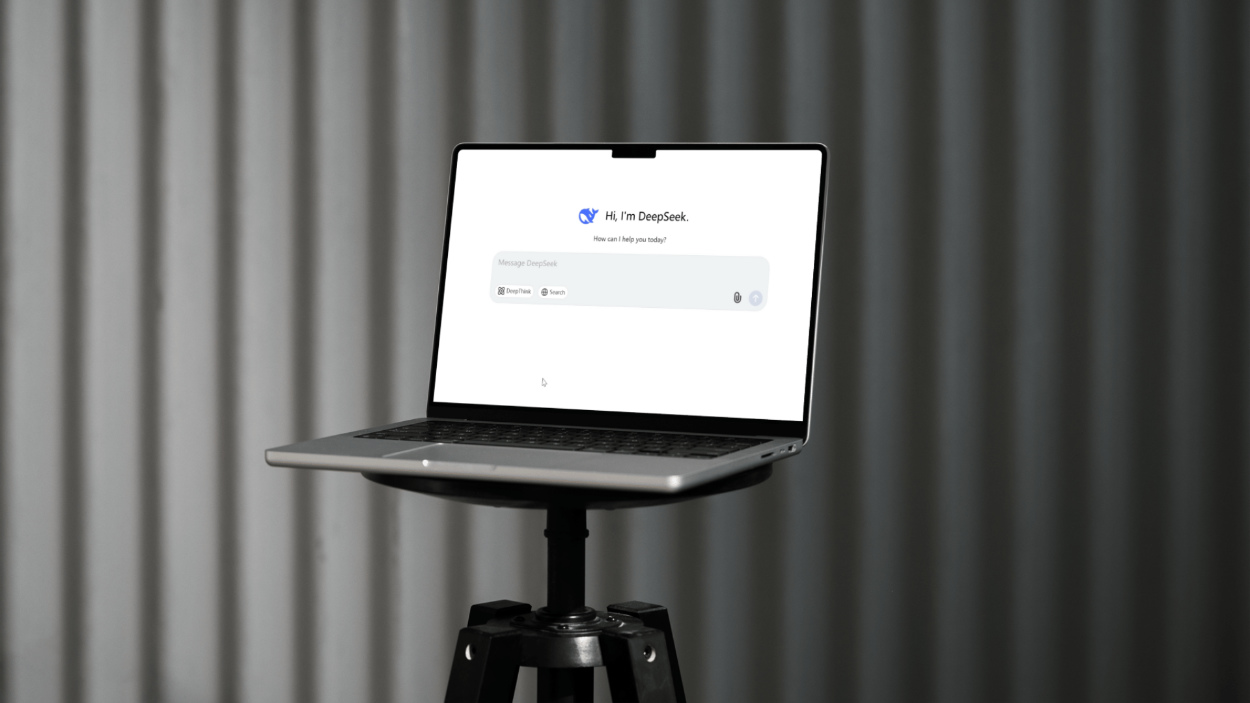

This week, the world became suddenly – and, depending on where you stand, painfully – familiar with a one-year-old, Hangzhou-based startup called DeepSeek.

Founded by hedge fund manager Liang Wenfeng, DeepSeek released its R1 model this Monday, alongside a comprehensive research paper detailing how they created the large language model (LLM), which sent shockwaves through the AI establishment. That surprise came about because DeepSeek – as packaged in the company’s own chatbot app, which became the top download for iOS users in just a couple of days – seems to have similar capabilities to ChatGPT but, according to its creators, it took just $5.6 million to train, while other well-known systems’ training runs have cost into the billions. The DeepSeek team also claim that they used 2,000 Nvidia H800 GPUs – a less advanced chip originally designed to comply with US export controls – instead of Nvidia’s A100 and H100 chips, which are used by most US AI companies.

If you’re not deep into the weeds on AI, the magnitude of this might get a little lost, so it’s worth framing it in context: new generative AI models are wildly expensive to create and incredibly costly to run. The pathway to profitability for companies like OpenAI is still pretty unclear because of this imbalance between money out, from research and infrastructure, and money in from subscriptions and API calls.

Now, if DeepSeek’s assertions are true, the vast resources and computing power that figures like Sam Altman, Mark Zuckerberg, and Elon Musk have deemed essential for AI – and for maintaining America’s leadership in the field – may prove to be overstated, or at least based on faulty architectural assumptions.

Nvidia (the company that has benefitted the most, in pure monetary terms, from the AI boom) took a serious hit following DeepSeek’s release. After its valuation had climbed past $3 trillion, as tech companies scrambled to secure its chips faster than they could be produced, the company’s stock plummeted 17% on Monday, wiping out $589 billion in market value and handing it the largest single-day loss for any company in history.

Even so, Nvidia appeared unfazed, telling Bloomberg that DeepSeek’s model was an “excellent AI advancement” and a testament to unexpected innovations in how new models can be developed.

In case you were wondering, reinforcement learning appears to have been one of the unlocks for DeepSeek – at least according to the company’s own messaging. DeepSeek used large-scale reinforcement learning focused on reasoning tasks, as opposed to OpenAI’s supervised and instruction-based fine-tuning approach. DeepSeek also used distillation to compress capabilities from large models (the full-scale DeepSeek R1 has more than 670 billion parameters) into models as small as 1.5 billion parameters. Distillation is not novel, but it is a key part of, for want of a better word, the “compression” of language models.
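For readers who want a feel for what distillation means in practice, here is a minimal sketch of the textbook "soft label" version, in which a small student model is penalised for diverging from a large teacher model's output probabilities. This is a generic illustration, not necessarily the recipe DeepSeek used; the function names, the temperature value, and the toy logits are all assumptions made for the example.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the softened teacher distribution to the student's.

    Hypothetical helper for illustration: the loss is near zero when the
    student reproduces the teacher's preferences and grows as they diverge.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Toy scores over three candidate next tokens (made-up numbers).
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # prefers a different token entirely

print("close student loss:", distillation_loss(teacher, close_student))
print("far student loss:  ", distillation_loss(teacher, far_student))
```

Training a student to minimise a loss like this across a very large number of examples is how a much smaller model can inherit some of a larger model's behaviour.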

At a fundamental, technical level, nobody is disagreeing with anything the DeepSeek team is saying. The universal sentiment is that the company has, one way or another, found a way to dramatically cut the cost of building and running models that end up at or near the frontier when evaluated against common benchmarks.

On a few different levels, though, the situation is anything but clear-cut – and the popularity of DeepSeek (the app) has quickly dredged up long-swirling concerns around intellectual property, national security and trade policy, data security, competition, and much more.

On Wednesday the 29th of January, for example (just days after R1’s release) the Irish Data Protection Commission confirmed to TechCrunch that it had reached out to DeepSeek, seeking clarification on how the company was handling the data of Irish citizens. “The Data Protection Commission has contacted DeepSeek to request information on the data processing practices related to individuals in Ireland,” said a spokesperson, who declined to provide further comments.

That letter from the Data Protection Commission came less than a day after a similar inquiry was issued by Italy’s data protection authority. DeepSeek has not publicly addressed either request, and its mobile app has since been removed from both the Google Play and Apple App Stores in Italy.

If the spectre of TikTok just emerged in your mind, you’re not alone. Western skepticism around the data governance practices of Chinese tech companies has been at the root of this year’s biggest diplomatic / tech battles.

For now, though, DeepSeek’s apparent breakthrough is changing the tenor of the discussion around AI deployment. Companies that have been reluctant to give an AI company access to all their data (even if it’s not explicitly used to train models) may be able to self-host sooner, and at a lower cost, than anyone expected.

Currently, it sounds like running DeepSeek’s 70-billion-parameter “small” model locally will require a combined 100GB+ of RAM or VRAM. This is well within the confines of consumer-grade hardware – albeit at the expensive end. Running the full DeepSeek model, quantised, would require more serious GPU power. But one of the smaller versions – used as is or fine-tuned – could be much more manageable while still allowing for brand-specific customisation, privacy, and control over dataset curation. Of course, it will also be possible to run DeepSeek’s models by using an online platform like RunPod, and services like Hugging Face will be updated as the models come online with inference providers.
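As a rough sanity check on those hardware figures, the memory needed just to hold a model's weights can be estimated as parameter count multiplied by bytes per parameter. The sketch below uses the parameter counts quoted in this article and assumes illustrative 16-bit, 8-bit, and 4-bit precisions; it ignores runtime overhead such as the context cache, so real-world requirements will be higher.

```python
def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


# Parameter counts as quoted in the article; the bit widths are assumptions.
MODELS = {
    "1.5B distilled": 1.5,
    "70B distilled": 70.0,
    "full model (~670B)": 670.0,
}

for name, params in MODELS.items():
    for bits in (16, 8, 4):
        gb = weight_memory_gb(params, bits)
        print(f"{name:>20} @ {bits:>2}-bit: ~{gb:,.1f} GB")
```

Under these assumptions, the 70-billion-parameter model needs roughly 140 GB for its weights at 16-bit precision and roughly 35 GB at 4-bit, which is why quantisation matters so much for anyone hoping to self-host.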

But with the architecture out of the way, we get into the truly circular and ironic part of this story. DeepSeek, you see, sometimes misidentifies itself as ChatGPT. There are several possible explanations for this. In the worst-case scenario, it was directly trained on ChatGPT outputs – a form of model distillation that OpenAI is already decrying as a violation of its terms of service. A less damning possibility is that it was trained on large-scale scraped internet data, some of which may include ChatGPT responses or discussions about ChatGPT. The most benign explanation is that DeepSeek is still predicting the most statistically likely response. If it has seen enough references to ChatGPT in the context of language models, it might simply infer that’s what it is when asked.

Whichever way, it’s probably safe to say that charitable feeling is in short supply where AI companies are concerned, with many – especially in creative communities – feeling as though models from OpenAI et al were already built on a foundation of uncompensated creative theft. There is certainly some deep irony that’s going to be mined here over the coming weeks.

But however the conversations around data security and IP shake out, the firm belief is that the market is going to make the ultimate choice, and that it will lean – as it usually does – towards price as the primary variable. As Reed Albergotti writes in Semafor this week: “It’s all about the tokens. Whoever can satisfy the insatiable market desires with the best capabilities at the lowest cost and latency is the winner.”

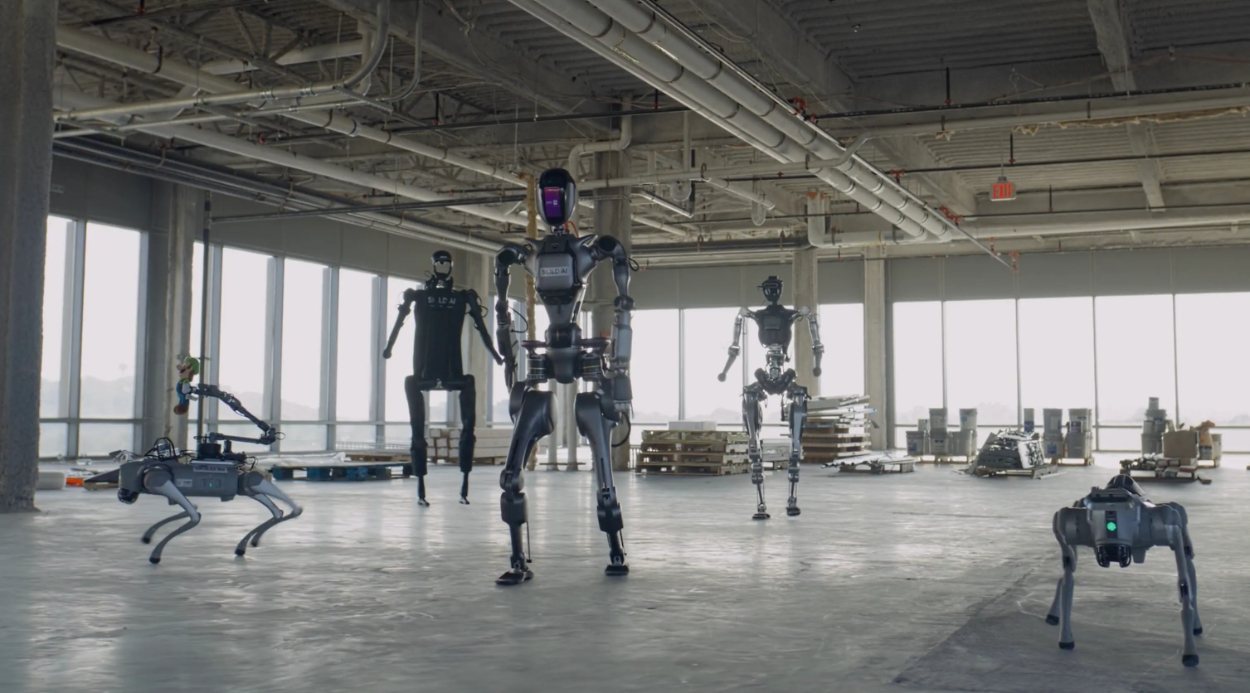

This might end up being the case for robotics, too. Also this week, Japanese investment company SoftBank is in talks to lead a $500 million funding round for Skild AI, which is developing a foundational model for robotics, according to Bloomberg. The report also indicates that the Pittsburgh-headquartered company could reach a $4 billion valuation during this fundraising round – quite remarkable considering the startup was founded just two years ago.

And fresh on the heels of that update, UC Berkeley spinout Ambi Robotics announced the arrival of AmbiStack: a robotics system designed to automate the process of stacking items onto pallets or into containers at maximum density.

AmbiStack integrates two essential warehouse tasks, picking and stacking, into a unified process. These tasks are labour-intensive and have led to injuries among human workers in the past. Previous solutions, like forklifts, brought their own safety concerns to the workplace. In contrast, AmbiStack eliminates the need for human labour at these critical points in the logistics chain. Ambi Robotics is likely hoping to follow the example set by its robotics counterpart, Covariant, whose technology has already been incorporated into Amazon’s automated systems.

Clearly, there is a lot of money being thrown at robotics startups that focus on foundational models. Alas, for AI companies following the same path, DeepSeek’s breakthrough may signal the end of that golden age. But the intersection of AI and robotics, and how it could help fashion’s future supply chain, is still nascent and very much something to watch. And who knows, we could see major progress sooner than expected.

Best from The Interline:

Kicking off this week was the Founder of CLO Virtual Fashion Inc. on implementing digital product creation strategically, balancing tech innovation with industry-wide transformation, and bridging the physical-digital fashion divide.

Next up, our guide to SOURCING at MAGIC’s upcoming event. Next month will see North America’s largest gathering of fashion professionals, brands, suppliers, and technology providers, connecting different perspectives to create a positive, lasting impact.

And closing out the week, Emily Steinke, footwear designer and co-founder of DUO Design Studio, explores the persistent business case for replacing physical samples in fashion footwear.