Key Takeaways:

- The Interline has always assumed that a big fashion or beauty brand would eventually raise a copyright case against a major generative AI company, but two legal decisions this week have cast doubt on what seemed the most likely avenue.

- Those judgments do, however, leave the door open for companies that are able to demonstrate that generated images dilute the market for real products – something that might be feasible in a more roundabout way for fashion.

- As the frontiers around AI and IP are being redrawn, brands will need to ask themselves whether they should be more concerned about the images that are being used to train AI models, or the tools that are being put in the hands of consumers to erode the intangible visual value of wearing something to be photographed in it.


Anticipated legal protections against AI training are starting to show cracks, raising the bar for any potential action from fashion.

Several times in the last year or so, The Interline has predicted that fashion (or beauty) brand lawsuits against one or more AI companies were likely, and that the first of these would be coming down the courtroom pipe sometime soon.

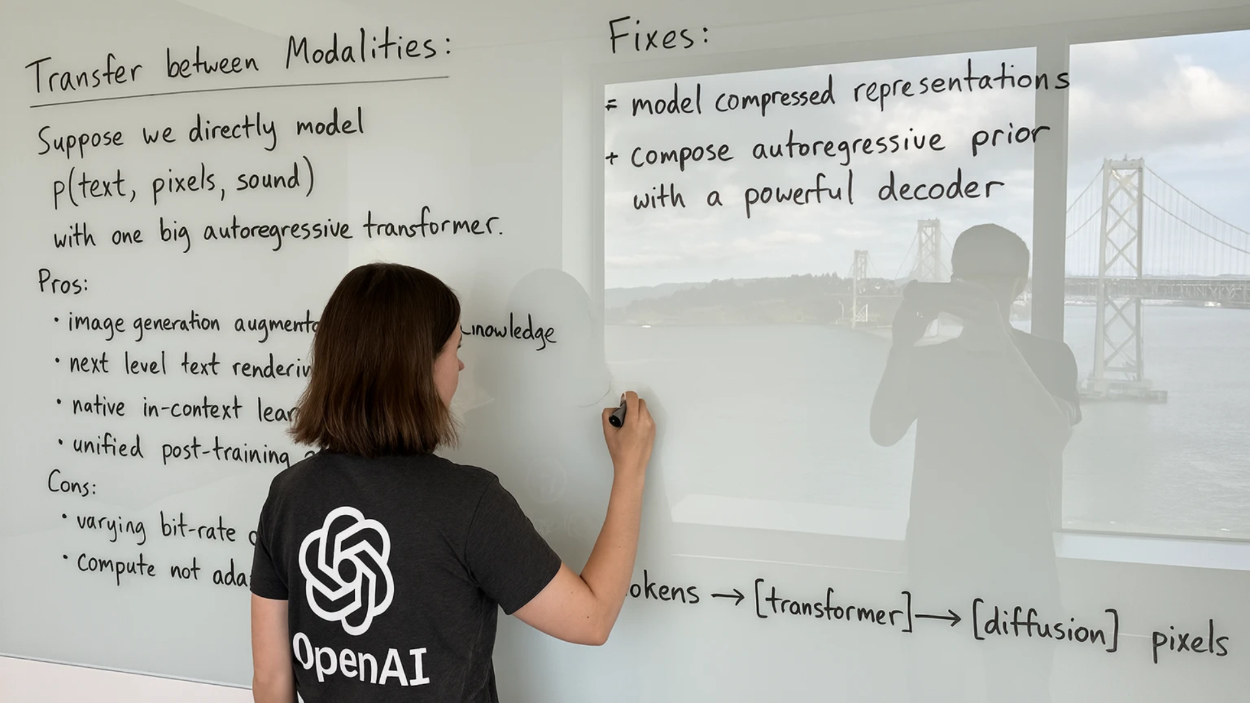

The reasons for this should be clear to anyone who has experimented with generative image or video models, or who’s made them part of their workflows in concepting, design, or marketing. In the early days of image-gen services like Midjourney, nondescript prompts for things like sneakers or handbags would routinely produce images that contained recognisable marks, logos, design signatures, or combinations of all three.

This was, unambiguously, a result of how those big, general-purpose models were trained: on the aggregate visual output of essentially the entire internet, which included fashion eCommerce photography, look-books, campaign shoots, street style, and a gigantic raft of social media content in which the world’s biggest lifestyle brands feature prominently.

Without putting too fine a point on it, the initial explosion of generative image models had fashion’s visual lexicon baked into it – along with the history of more general photography, illustration, film, fine art and much more.

For a period, the same image generation services then attempted to restrict these recognisable outputs, not by retraining the models (an expensive process at a technical level, let alone when we consider the logistics of trying to remove a particular brand from a corpus of data this vast) but by blocking the prompts. During this time, asking an AI to generate a Gucci crossbody bag, for example, would (most of the time) have returned an “I can’t help you with this” message, even though the model still retained the capability to do so, and even though the underlying training data had not changed.

Then, roughly coinciding with the launch of the GPT-4o native image model, released this March, the protection walls officially came down. Unlike prior models (or at least the frontend applications that interact with them) the native GPT-4o model would happily generate pretty realistic celebrity likenesses, fake brand campaigns, nearly-there film stills and plenty more.

We wrote about this newly-permissive approach to generative AI at the time: while the world was fixated on the idea of Ghibli-fying itself, some observers also noticed a more worrying undercurrent. People were certainly having fun with that phase and the considerably-more-irritating “action figure” one that followed, but behind the scenes there was a clear message: platform holders who had previously erred on the side of caution when it came to allowing people to play around with famous visual signifiers now felt insulated in some way from any potential blowback.

And that permissive era is still where we are a few months later. Users who feel like generating images that approximate (sometimes to an extremely fine degree) existing artists, creators, or brands have free rein to do so, without needing to resort to jailbroken local models. The generative AI space is currently an IP free-for-all.

Needless to say, the media giants of the world are taking notice. Disney and Universal filed a suit against Midjourney just under two weeks ago, with their 110-page complaint showcasing numerous examples of where the output of Midjourney appears to be a nearly (but not quite, with that distinction mattering, as we’ll see in a moment) 1-to-1 replica of still frames of Disney or Universal films.

The argument in that complaint does not require anything like 110 pages: it is, distilled, that generative AI image models are “a virtual vending machine, generating endless unauthorized copies of Disney’s and Universal’s copyrighted works…”. And tied to this claim about the output of image gen models – explicitly Midjourney, but implicitly others – is the notion that those same models must have taken Disney and Universal works as inputs.

This is similar to the core notion behind the suit that the recording industry body RIAA brought against generative audio services Suno and Udio around this time last year. That case contends that those services would not be able to produce songs that sound an awful lot like Johnny B. Goode, if the Chuck Berry original was not part of their unlicensed training data.

On balance, the maxim is that Disney does not tend to wade into litigation lightly, so the very filing of this case added a kind of social heft to the idea that things were not going to go the AI companies’ way. (A similar suit between the New York Times and OpenAI, which makes the contention that the company’s GPT models can reproduce verbatim passages of NYT articles, which must mean they were trained on and somehow “hold” copies of those works, also looked like a bellwether for the same reason.)

So until this week, the feeling has been that, if fashion did decide to wade into the AI copyright battle, that litigation would likely go the way of the “Metabirkin” case. That suit has been dissected ad nauseam by publications with much more legal expertise than The Interline, but the crux was that Hermès – creator of the Birkin bag, named for actress and muse Jane Birkin – was able to demonstrate that the creation of NFTs that appropriated and remixed its iconic silhouette represented an infringement on the brand’s trademark rights.

Notably, that was not a counterfeiting case (the Metabirkins were, as the name suggests, not physical goods) and neither was it a pull at the frontiers of the parody defense that served artists like Andy Warhol well (until it didn’t), since that latter argument was rejected early in the case.

Instead, the Metabirkin case came down to central ideas of consumer confusion and the blurring of trademarks or trade dress (a definition that captures distinctive colours, logo elements, product silhouettes and attributes, and more). That suit pivoted on the finding that, while a brand might not be directly tarnished by someone using its trademarks or trade dress in an unauthorised way, that usage can still serve to make the original mark less exclusive or less distinct.

Or, to put it another way, the existence of virtual approximations of a real brand and / or its products can have a measurable negative impact on the market position of that brand.

That was a landmark case at the time fashion was lost in NFT fever (an odd period to look back on!), but in retrospect the scale of the risk there – even during the peak of the NFT hype cycle – was small compared to the open-season on intellectual property today. Generative AI is, obviously, a completely different class of technologies, but it’s also a fundamentally novel proposition in the sense that, rather than being a discrete collection of digital objects, it’s essentially putting the power in users’ hands to cause some of the same category of trademark damage that the Metabirkin case traded in.

The other key difference is that the NFT case centred on the NFTs themselves, not the technology layer underneath them, or the materials used in their creation. So far, the cases brought against generative AI companies have focused on the act of training models on copyrighted works without license – an act which is then manifested in the output of the models – rather than going after the outputs themselves as infringing articles.

And from that vantage point, prior to this week The Interline would have guessed that fashion brands were in with a shot. Fashion look-books, campaign shoots, runway photography etc. are all copyright-able works, and if an image model ingested those works during training and then created images that seemed clearly derivative of, or that remixed, the originals, then brands could at least mount an argument. (We understand that AI does not remix or derive, but the principle transcends the technicalities.)

This week, though, the question of whether that kind of ingestion for training constitutes “fair use” (and is thus protected) was tested in two separate court cases, and neither went in the plaintiffs’ favour.

First, Anthropic (the research lab and product company behind Claude) prevailed over a class of authors who claimed that Anthropic had pirated copies of their books to help train Claude-series models. In that case, the judge ruled that training was sufficiently “transformative” that it fell under existing fair use definitions for enabling creativity and “scientific progress”. (As it turns out, Anthropic did pirate many books, and the company will be open to damages for that act, but it also purchased and scanned millions of print books legitimately.)

Second, a judgment was handed down in favour of Meta (the company behind the Llama-series of AI models, as well as, well, Facebook, Instagram, Threads et al) which landed in a similar place: that AI training is considered “fair use”.

In both cases, the bench was careful to say that these judgments do not set a precedent that all AI training is lawful, but it’s difficult to read their coincidence any other way – especially when we take account of Wednesday’s reporting that image library Getty had dropped two key claims in its suit against image gen company Stability AI, and that it would no longer be arguing anything to do with training on copyrighted works, or on the similarity of AI outputs to original photography.

(Getty are instead arguing that the models themselves, when they are “imported” into the UK, contain infringing articles the same way that someone smuggling copied artworks would be. The outcome of this will be extremely contingent on the company successfully establishing that models really hold copies of photography in a retrievable form, when in reality they store them as closed-book weights in floating point math, if they can be said to “store” them at all.)

So on a “vibes” basis, we’re now in a rather different place to where a lot of industry analysts thought we might land. But although the outlook now seems unfavourable for any company trying to mount a “training is not fair use” argument, the Meta case left a noteworthy door open, pointing out that the claimants in that case simply had not done enough to demonstrate that the output of AI models would actually dilute the market for the original works.

This is now the question at hand: is having an AI summarise a book, or create images that look like stills from a film, a direct substitute for reading that book or watching that film? And, as a consequence, will the ability of AI to generate, say, fashion campaign photography, lead to lost revenue for fashion companies?

This might seem like a silly question for any industry that trucks in physical product. After all, outside of licensing arrangements, fashion “sells” lifestyle images only as an ancillary element of a wider brand story that’s intended to lead people to purchasing real, tangible objects. It seems counter-intuitive to consider that giving people the ability to generate images could erode the market for the things those images depict.

In reality, though, there’s a chance that this could actually turn into fashion’s best defense. And there are two stories from this week that add some weight behind this idea.

First is the story that eCommerce mainstay Asos is now “banning” what reporting refers to as serial returners – i.e. shoppers who buy multiple products and then return some or all of them, and then repeat this behaviour. The consumers interviewed for that story claim that fit-testing is the primary root cause of that pattern, but it has become common knowledge that a decent-sized cohort of people purchase clothing to wear it once (leaving the tags in) in order to be photographed in it, and then return the garment afterwards.

Implicit in this way of shopping is the idea that clothing, rather than being a purely tangible physical good, has an ephemeral visual value that consumers want to acquire – one that they then express in images, before divesting themselves of the item if they don’t intend to use it again. And on that basis, it’s logical to guess that certain groups of people would almost certainly generate images of themselves wearing styles (famous or otherwise) rather than buying them – thereby directly diluting the market for the original product.

While this applies to actual products that a company has made and shipped, it’s a short hop to applying the same principle to AI-generated approximations of a brand or designer’s identity that are not tied to any real product, but that still scratch the same itch.

And yesterday also saw Google – probably accidentally – wade into the fray, releasing its new AI-powered virtual-try-on app Doppl in the United States. The Interline is UK / EU based, and so we have not been able to test the app, but according to the media released alongside it, Doppl is different to Google’s pre-existing Google Shopping VTO feature. The latter seems to be built with brand and retail partners, while Doppl is expressly designed to allow online shoppers to try on literally anything they can find a photo of:

“With Doppl, you can try out any look, so if you see an outfit you like from a friend, at a local thrift shop, or featured on social media, you can upload a photo of it into Doppl and imagine how it might look on you. You can also save or share your best looks with friends or followers.”

Google has not disclosed which models run behind the scenes of Doppl, but there’s a thread to be pulled here from the Midjourney case right the way through. If shoppers can visualise themselves wearing anything in the world, and then share those visualisations (Doppl has sharing as a prominent feature), then are those shoppers more likely to buy the clothes, or more likely never to make it to the checkout?

Right now, none of this is integrated into the design and development pipeline. But as AI systems do find their way into fashion’s workflows more deeply, it’s going to become important for the industry to ask itself whether the real legal concern around AI is not the images that already exist, but the new ones that are about to be created in potentially massive volume.