This story is a collaboration between The Interline and Make The Dot, an AI-native, non-linear workflow platform designed to help fashion teams turn ideas into real products efficiently, with end-to-end alignment. Together, we explore how operational AI can make a major difference to the effectiveness, speed, sustainability and efficiency of concept-to-sample workflows, and have a measurable net positive impact on product outcomes, where traditional technology initiatives and brute-force strategies have fallen short.

Spend any amount of time researching AI for fashion, and you’ll be inundated with image generators and applications that promise endless early-stage inspiration and an ongoing drip of creative fuel. And from that exposure you’d be forgiven for thinking that fashion’s most pressing issue was a lack of ideas.

In reality, very few fashion companies are creativity-constrained. For companies that hire the right talent, inspiration is simply not in short supply. Instead, what most brands have in common are operational setups and technology stacks that let an alarming amount of that creativity leak out on the route from concept to sample – steadily sapped by material and process waste, redundant work, low adoption rates, cost uncertainty, miscommunication, and misalignment.

However distinct they are at the brand and cultural level, every fashion company, irrespective of size, category, or market position, follows a predictable go-to-market cycle: inspiration, concept development, visualisation, and then communication through presentation and sharing. And it’s during this journey that the best intentions, and the best-defined product outcomes (whether those are measured in margin, performance, fit, trend acuity or a range of other potential metrics), get lost in a linear sequence of iteration, revision, cross-functional input-gathering, further iteration, and reconciliation – little of which happens in the same place.

As it stands today, every non-standardised and non-systematised step between an idea and a prototype imposes its own tax and adds its own overhead. Those overheads can be measured in time and effort: the average brand expends a great deal of both refining, presenting, and visualising concepts that are never adopted, all while the window for success is shrinking. They can be measured in pure cost: every salesperson sample created has its own bottom-line impact, at a time when raw input costs are on an unpredictable upward trajectory. And they can be counted in any number of other ways, from environmental impact to sourcing strategy.

However preoccupied fashion as a whole is with how consumers perceive its products and stories, the more pressing challenge is how it positions those same things for the internal audiences, upstream partners, and retail buyers who need to buy into them for the product to succeed. Alignment and operational efficiency, not greater throughput of raw ideas, are the two currencies the industry actually needs.

So far, fashion has had three levers to pull when it comes to reducing the distance and the friction between concepts and samples (both digital and physical): off-the-shelf, industry-agnostic software; fashion-specific software; and increasing headcount. And as we’ll see, none of these has substantially altered typical lead times, adoption rates, profitability, or sample-to-style ratios, while many have introduced their own challenges and lock-ins.

The most obvious manifestation of fashion’s reliance on generic tools is the industry’s extensive use of freestyle, sector-agnostic whiteboards like Miro and Canva. These have provided designers and development teams with an unconstrained collaborative canvas to work from, and easy access to automations (like background removals and cut-outs), but those turnkey features have come at a cost.

Inspirations and assets captured in these platforms do not carry metadata or sources with them, and neither do these boards connect to external material or colour libraries. And as, over time, product teams have placed more orphaned objects in them, and essential debates and decisions have taken place in the comments and margins of countless forked and versioned boards, these platforms have become islands of disconnected data that lead to high switching costs and the very real risk of lost information.

Although fashion-specific solutions, such as 3D design, simulation, and visualisation, have put more powerful and better-tailored tools into product teams’ hands, allowing them to create and share virtual samples, the skill ramp and time investment required to use these tools effectively can be high. 3D design and pattern development have also tended to be solo activities, with 3D users required to convert their work into shareable formats before colleagues can make creative or commercial decisions based on it.

In both cases, the tools themselves can also create an administrative burden unless they are directly integrated into enterprise platforms. As a result, even in organisations that technically have PLM as a core system of record, the volume of work happening in other locations leads to the need for manual entry of style details, product descriptions, and other data. And even the most mature deployments are still surrounded by a point cloud of other spreadsheets and solutions.

Increasing headcount, on top of being far down the list of executive priorities, has the potential to scale throughput and volume, but it is unlikely to fix the leaks in the concept-to-sample system. It also introduces more gatekeepers and increases the likelihood that teams sitting next to one another in the product journey are working from incomplete information.

In this context, it’s no surprise that fashion has been receptive to the idea of AI as a way to automate, streamline, or scale one or more steps of that concept-to-sample journey. But as with the broader technology journey, general-purpose AI models and applications currently seem to introduce many of the same challenges as generic non-AI software, while sector-specific solutions introduce their own overheads.

A general-purpose image generation and manipulation model like the Nano Banana iteration of the Gemini image model, for example, can offer creation and editing capabilities, but it is unlikely to meet the required bar for accuracy and consistency where products are concerned. In other general models, the ability to edit images is either blunt and limited or missing entirely, and these models also raise copyright concerns and carry a surprisingly steep learning curve for effective prompting and use.

And even with the best AI applications, the stated value and purpose of the solution doesn’t always correspond to the fashion industry’s actual challenges – many solutions are still calibrated for scaling-up inspiration, but without anchoring that inspiration in either producible reality or in real, day-to-day design-to-production workflows where accuracy and alignment matter more than acceleration.

All of which is creating a gap for a different kind of platform – one that’s built with AI at the core, that’s industry-specific, that packages up the intuitive capabilities and collaboration layers that end users value from off-the-shelf tools, and that unifies the different stages of the design and development journey in a single location.

And in fact, some of the fashion world’s most prolific companies, working in the full-service models where product design, development, and production happen on behalf of the planet’s biggest brands, are already proving out the differential value of that kind of platform.

Powerhouse producer Li & Fung, for example, has used the AI-native workflow platform Make The Dot to shorten its development cycles (speeding up the concept-to-sample workflow by 80%) and to dramatically cut the distance between idea and presentation by generating photorealistic garments from boards (reducing line sheet development time from a full week to less than a day), with the net result of increasing adoption rates by almost a quarter.

And one of the largest, most historic, and best-connected apparel manufacturers in the USA has also employed Make The Dot to centralise inspiration capture and collaboration, quickly translate concepts into photorealistic CADs, and then present those renders to partners – all while also relying on source and metadata handling to reduce manual input and automate the tagging of attributes for use in enterprise systems.

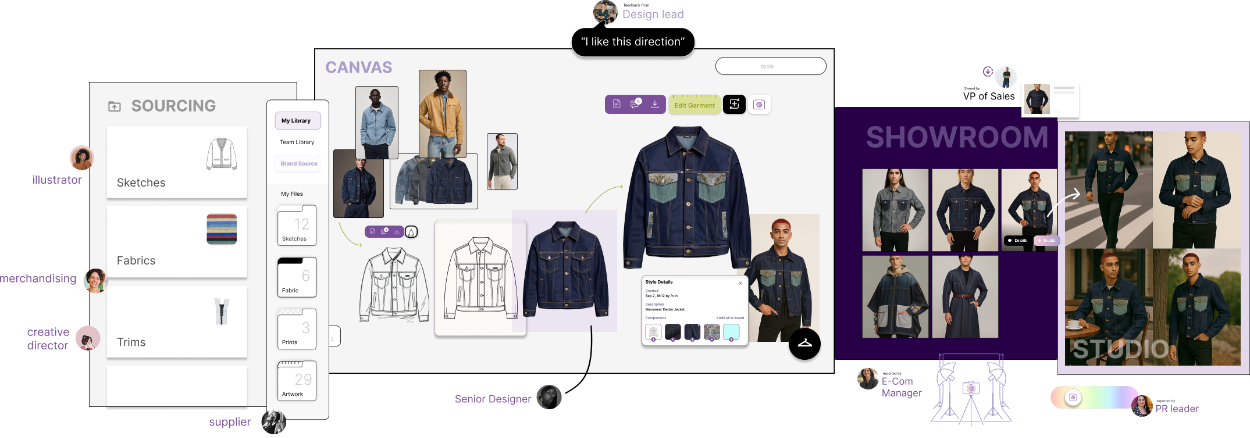

To help these and other major enterprises unlock those benefits, the team at Make The Dot started by acknowledging the limitations of both existing multi-tool ecosystems and the narrow frameworks that have governed the way AI has been adopted, as well as the constraints of the models themselves. While their design and development workspace is AI-native, it does not elevate AI to the status of prescriptive decision-maker; instead, it puts AI to work behind the scenes of a centralised platform that aligns people across the concept-to-sample process, and that unifies the different, disconnected components that have introduced so much friction into those workflows.

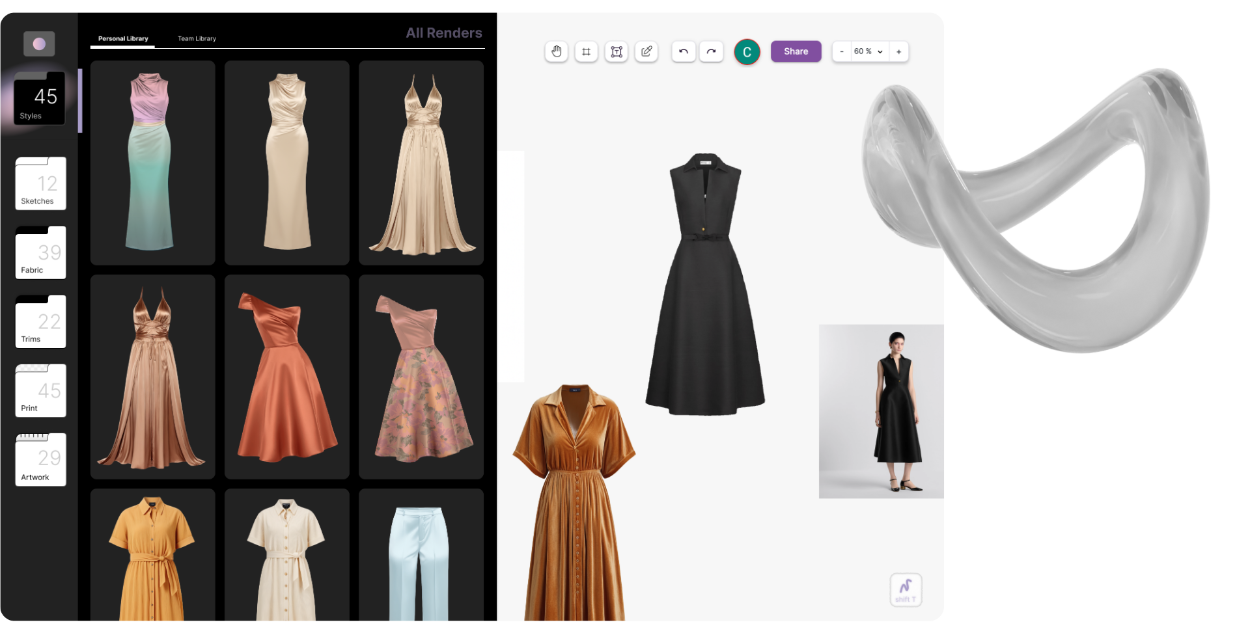

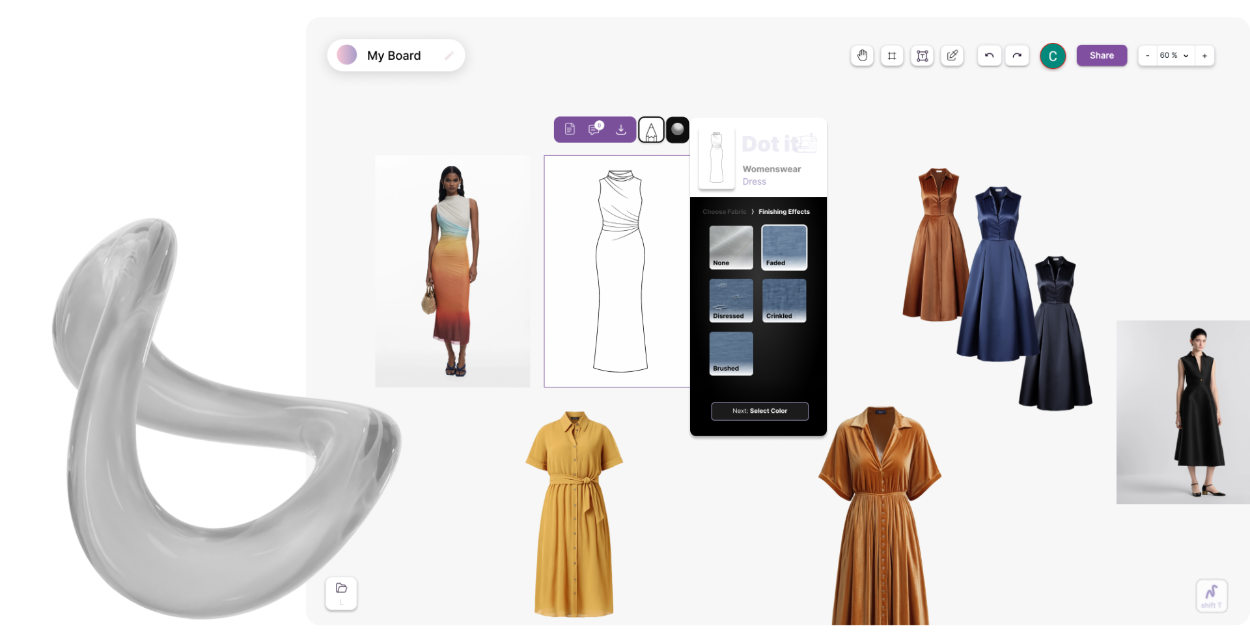

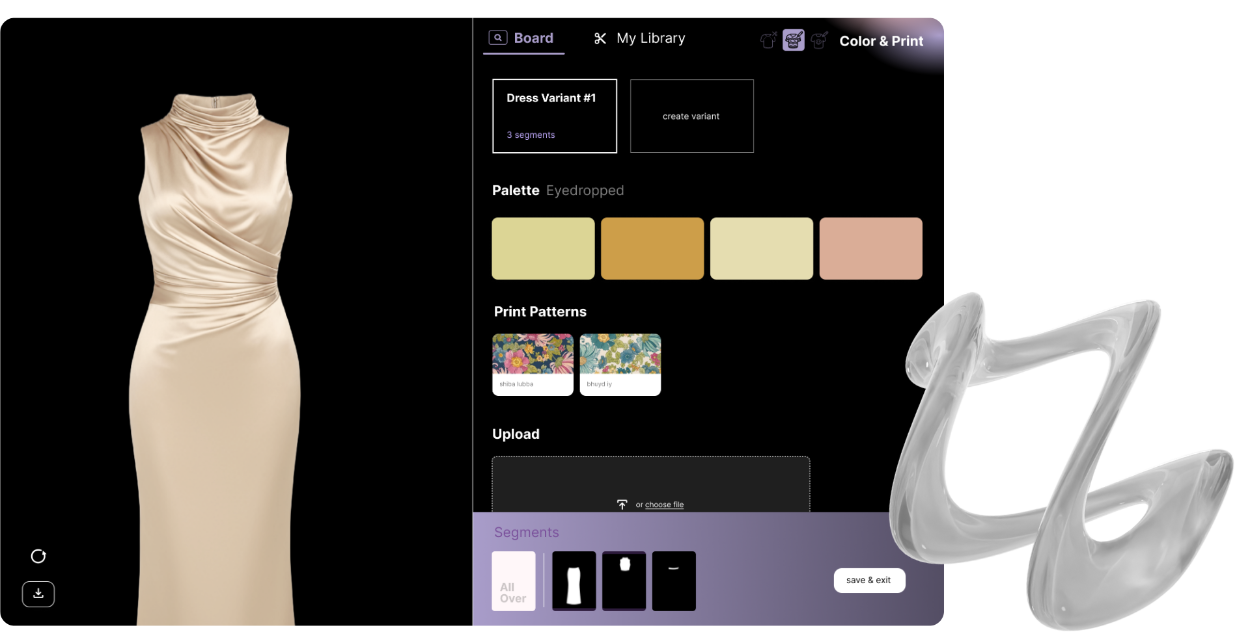

Like the household-name off-the-shelf tools, a central pillar of Make The Dot is a flexible, extensible, freeform collaborative canvas with easy access to everyday image manipulation and organisation features. But unlike those solutions, Make The Dot’s approach prioritises an accountable, non-destructive import process in which inspiration saved from the open web is captured along with its sources and metadata. And unlike standalone boards, the team at Make The Dot have prioritised integrated fabric and colour libraries, making sure that decisions taken within the canvas are based on accurate data.

Make The Dot then applies AI to bring these different elements together, empowering product teams to translate design sketches, materials, colours, prints and other components into realistic, accurate product renders. The platform also provides granular editing capabilities, including the ability to mask and separate components, so that teams can collaborate, comment, and iterate in a single space, on a single feature of a garment, with complete control. And when it comes to presenting styles and collections, Make The Dot adds the ability to render those products on-model, with full control over lighting, poses, and other key attributes.

The next step of the concept-to-sample workflow, translating those accurate product and model renders into real samples, is a top roadmap priority for the Make The Dot team, with integrations into enterprise systems like PLM already present today, and a flow all the way from concept to technical specifications in scope for the near future.

Rather than positioning their platform as a purely generative tool or another additive enterprise system, Make The Dot believe that its value will be measured in precisely the places where value leaks out of the current technology stack: the spaces between individual steps in the workflow, and the gaps between the different people who need to contribute to that journey.

As a result, however powerful Make The Dot’s core capabilities are proving for design and development teams, their benefits also extend beyond the core concept-to-sample process and into sales and customer engagement, potentially enabling brands to launch additional collections without increasing headcount, and allowing not just internal teams but also retailer-facing sales functions to iterate on concepts and samples to further increase the chances of adoption.

All of which should be a reminder of the difference between a design tool intended to provide inspiration, and a design and development environment that has the potential to deliver value in all the same areas that waste and overhead are measured in the typical concept-to-product process today.

As with any platform that promises to consolidate tools, there is always the potential for cost-cutting, but the value of a platform-first approach to AI, like the one Make The Dot have developed, is likely to be judged differently.

As The Interline wrote earlier this year, in an article focused on the ability of AI to bring structure to industry workflows, “fashion’s inherent complexities, uncertainties, and inefficiencies, as appealing as they might look from the outside, end up in waste, financial loss, and a state of perpetual reactivity and firefighting”. A platform that is able to centralise processes, deliver on the promise of collaboration, and provide a one-stop, AI-native pipeline that converts creative ideas into market-ready products with as little friction as possible will be judged by its ability to cut that waste, minimise those losses, and put fashion brands back on a proactive, productive footing.

Through that lens, as incredible as the results of image generation are, the real return of AI could be on a completely different level.

From that vantage point, 2026 is set to be not just a year of outward disruption, but also an uncommon opportunity for design and development teams to fundamentally re-tool their operations as part of a wider technology sea change. Where the last twelve months have been defined by pilots, experiments, and isolated AI initiatives, the next twelve are the chance for AI-native workflows to become concrete – to take their place at the heart of product creation, and to exert a measurable influence over final product outcomes.

For a platform like Make The Dot, the brief is clear: to make a difference not just to where concepting, design, and development happens, but to change the concept-to-sample journey at an operational level. And the opportunity to translate that mandate into action is now.

Find out more about the features of Make The Dot, or explore the company’s case studies. For more on the “application era” of AI, download The Interline’s AI Report 2025, or sign up for the upcoming “Entering The Application Era” live online event.