Fashion and beauty are facing an uphill engagement challenge: creating meaningful, tangible experiences that captivate and convert eCommerce visitors who are fatigued by generic, static web content, and who are increasingly choosing to mediate their online journeys through AI apps, rather than coming to brands directly.

Many fashion, beauty, and consumer goods companies actually already hold the key to tackling this challenge: digital twins of products, and the experiences that can be built from them, and then deployed across both traditional web channels and the emerging frontier of the agentic internet. But scaling how quickly companies can create those 3D representations, how effectively they can manage those assets once they exist, and how widely and easily they can distribute them, is fast becoming a critical bottleneck that traditional digital product creation (DPC) tools, processes, and platforms haven’t been able to break.

Lately, companies have experimented with employing AI at the edges of this pressure point, as a way to create image, video, and written content, or as an enhancement for 3D pipelines. But there is significant untapped potential for AI to change the way brands think about creating 3D content, how they approach sharing it with consumers and capturing usage insights in return, and, overall, how to obtain value from creation and distribution pipelines that are scalable, affordable, and that meet the demand for differentiation.

To understand how fashion arrived at this mismatch between 3D demand and supply, and the role that AI is set to play in supercharging not just the creation bottleneck but the entire content and engagement journey, The Interline partnered with Threedium.

Already a forward-thinking company when it comes to the use of 3D to transform web experiences, trusted by giants like LVMH, L’Oreal, Tapestry and more, Threedium is now making big strides into what it refers to as “the world’s first agentic spatial commerce and intelligence infrastructure,” designed to accelerate every part of the new content ecosystem with a logical combination of 3D, AI assistance and automation.

These pioneering steps also include a homegrown AI model, and a unique foray into the unifying layer of Model Context Protocol – which The Interline has previously written about as the next stage of the AI-native web, and which floats the promise of allowing digital twins to be centralised and then distributed to both traditional web scenarios and to the spectrum of emerging AI-native platforms, putting natural language interfaces and 3D content side by side.

This is potentially a transformative pitch for not just 3D and DPC teams, but for a wider industry that is feeling intense pressure to create, communicate, and generate revenue from new experiences without also linearly scaling headcount and operating costs.

The web is changing. We’ve covered this pretty extensively at The Interline from a search and discovery point of view, but the near-term challenge of being found is actually a very narrow window on a much wider, more durable redefinition of how the creation and consumption of online content – especially branded product content – is shifting.

While brands are currently laser-focused on making sure shoppers continue to arrive at their lifestyle pages and PDPs in the age of AI and agentic search, the far deeper question is what those visitors actually want to spend time engaging with, and what it means to create that meaningful content at speed and scale.

Right now, thanks to the breakneck roll-out of generative text, image, and video models, traditional, passive content is trending towards genericism and statistical averages, and some consumer cohorts are turning away from AI-generated media as a result. In an endless sea of extremely similar written and visual content, differentiation is difficult to build – and website visitors who arrive at unremarkable or inauthentic content will quickly churn.

One of the potential foils against this slide towards content commoditisation and fatigue is for brands to innovate within the constraints of static web content, placing a heavy emphasis on human handiwork, organic creativity, and authenticity in established modalities.

The other, more profound, transformation is to stand out by embracing a different medium, turning passive engagements into active ones by making product-accurate content that’s interactive, tangible, sticky, and that builds a more multi-dimensional touchpoint between the shopper, the SKU, and the brand.

We’re talking, of course, about 3D. From explode-out product explorations to configurators and personalisation engines, brands and retailers have already found strong business cases for exploiting the native 3D rendering capabilities of the web to publish and promote more complete, more compelling representations of their products. And those capabilities have been quietly growing as standards and deployment pipelines have solidified, with fidelity and flexibility set to pick up even further with the progress of WebGPU support in major browsers, the growing graphics grunt of most consumer devices, and potentially the ability for the AI chat apps that have come to mediate a lot of internet interactions to natively render 3D content.

A shopping experience that’s defined by hero 3D content is not, we need to be clear, a speculative thing, or an idea that’s somewhere around the corner. This isn’t the woolly promise of an eventual metaverse. Right now, putting products in front of people using interactive 3D assets, instead of static content (AI-generated or created by human hand), provides a proven uplift in the metrics that count for apparel, beauty, footwear, and accessories eCommerce strategies.

According to Threedium, our sponsoring partner for this story, substituting 3D product representations for static images translates into markedly longer session duration (the online equivalent of in-person dwell time), a 67% increase in engagement, 45% higher conversion rates from exposure to purchase, and a 25% uptick in omnichannel revenue.

So can we infer that simply creating more 3D content, and putting it in front of more shoppers, is the answer to all of fashion and beauty’s content engagement, conversion, and monetisation problems?

In one sense: yes. Very much so.

The edge provided by putting digital twins of products in front of consumers is tangible and real. The market for 3D content on the web is scaling quickly, with growth rates of more than 12% year-over-year, and a predicted market value of more than $1 billion USD by 2033.

And the same content also has potentially even more significant value behind the scenes, with feature-complete, accurate, interoperable 3D assets being positioned as the best way for internal teams and external partners to communicate, collaborate, and align on the decisions that drive aesthetic, functional, and commercial product outcomes.

As a matter of fact, in pilots of their new AI-centric 3D suite with some of the world’s biggest brands, Threedium have found that working in a 3D and AI-native way can cut the cost of designing new concepts by 18%, as well as improving conversions (when the same philosophy is extended downstream to the consumer) by 20%.

But in another sense, judged from behind the scenes, in the offices of brands big and small whose overstretched design and content teams are already staring down a bunched-up pipeline, that edge is proving very difficult to sharpen. In order to create digital twins of products at scale, and to then manage, organise, distribute, and realise value from those digital twins, brands have had little choice other than to increase headcount, buy more 3D software licenses, and invest extensively in building out specialist skills at every step of the journey – from 3D creation to the roll-out of 3D assets across different sales channels.

Based on Threedium’s industry insight, those 3D teams can already find themselves spending up to 70% of their time reworking existing assets for different use cases (building different poly-count models, or LODs, for single uses), working to unclear standards and quality thresholds for specific applications, and building bespoke pipelines to connect different modelling, texturing, staging, and rendering tools.

So when you hear a company talk about “scaling” digital product creation initiatives, this is often what they really mean: finding new taps to try and turn to extract additive value from having 3D representations of the majority of their products, but then realising that this extension and expansion requires more time and talent at the top of the funnel.

To help ease those bottlenecks, many brands have supplemented their in-house 3D capacity and capabilities by hiring in the expertise of creative agencies and 3D studios, of which there are some especially strong examples. While these agencies also introduce their own costs, they have proven to be an important crutch that’s allowed fashion brands to scale up content creation without massively increasing their overheads. But even these agencies would not describe themselves as long-term replacements for a more streamlined 3D ecosystem and a less labour-intensive approach to building, managing, and then deploying digital twins of products in a way that delivers one or more measurable returns as judged by established retail KPIs.

This common bottleneck between the hunger for 3D and fashion’s ability to supply it is also directly behind the elephant in the room: the rush to evaluate replacing time- and labour-intensive 3D with AI. One of the key catalysts for the way fashion in particular is thinking about AI is that it promises a smoother, faster, and potentially much cheaper way to meet the demand for consumer and business-to-business content of all types – 3D potentially included.

To understand this drive, we only need to consider the cost of 3D projects with interactive web content as their output, which, according to Threedium’s direct experience, are typically initiatives involving up to fifteen 3D designers, with an average time to market of at least eight weeks, and a price tag in the tens of thousands of dollars. If we contrast this headcount, time, and cost against the price of even the top-tier general-purpose AI subscriptions, the time from prompt to visualisation, and the reduced need for parallel workers, the outlay is, at least in theory, dramatically reduced.

But as we’ve established, and as the market has shown, over-indexing on AI for content creation (i.e. relying solely on generative images or video that have no foundation in 3D) can be destructive to consumer sentiment and, crucially, often falls short of the level of product accuracy that brands need to showcase their content in ways that actually stand out, rather than just standing in for traditional assets.

Or, to put it another way, fashion and beauty are quickly discovering that AI cannot replace 3D. But there’s growing optimism that it can serve as a way to dramatically reduce the time and the cost of creating digital representations of products that can transform in-house prototyping, can become the much-needed fuel for a differentiated model of consumer engagement, whether those consumers are discovering the brand on the web or through AI apps, and can potentially change how and where those 3D representations show up.

That optimism has, over the course of the last year, spurred the team at Threedium to try and build what they refer to as “The Holy Grail of Spatial Computing”. Working in partnership with major fashion houses, 3D vendors, PLM platform owners, and cloud infrastructure providers, Threedium have been quietly trying to chart a different way forward for creating 3D. And working with the fast-emerging Model Context Protocol standard, the company has come up with an approach and an interface that allow large language models to directly interact with databases, libraries, and assets to generate, segment, manipulate, and even help distribute 3D content.

But what does that look like in practice? From a capability, cost, content creation and distribution point of view, how does it map to what product and content teams need to accomplish? And what new experiences and opportunities does the combination of 3D and AI potentially unlock?

In the first instance, the new Threedium ecosystem platform is hyper-focused on streamlining the creation of 3D product representations that are on-brand, on-model, and accurate, but also scalable to build without compromises.

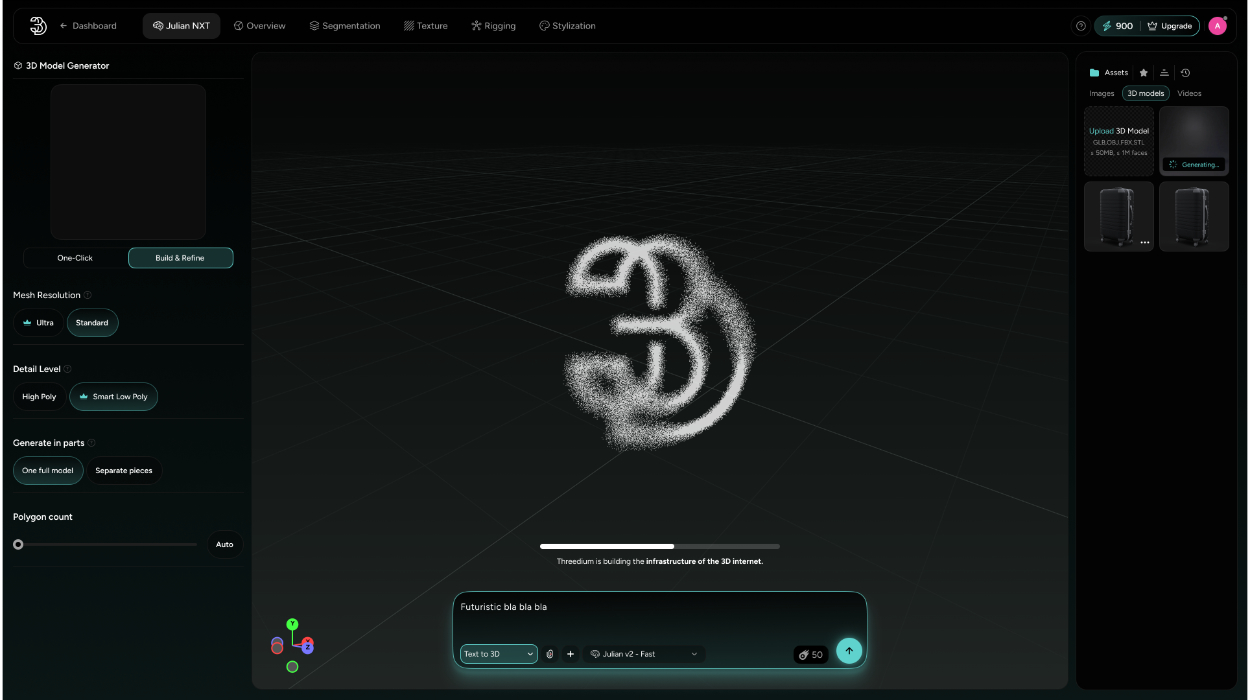

This work manifests itself in what the team refer to as the “AI Studio,” a suite of tools designed to allow all kinds of users – not just 3D professionals – to generate hero-quality 3D content from as little as a sketch or a single image. And for products where more photography is already available, the level of automation that Threedium promise allows anyone to convert four shots into high-fidelity 3D models ready for the most demanding marketplace applications.

The same philosophy is also behind the platform’s aim to allow users to deploy no-code configurators based on 3D assets (either authored using traditional, labour-intensive 3D, or through the company’s own AI Studio), with automatic channel and device optimisation for web, mobile, XR, smart glasses and more.

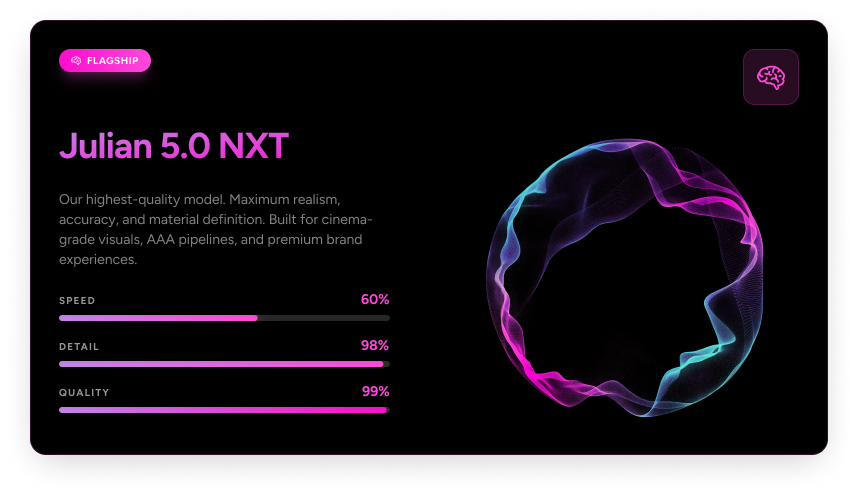

Behind both of these applications is a homegrown flagship model that the Threedium team have dubbed JULIAN NXT, which is specifically trained to convert images, sketches, and ideas into digital twins that the company says are fit for any use case – from 3D printing and web deployment, to offline CG and Unreal Engine / UEFN for Fortnite.

The new Threedium platform is also intended to be a tool for deploying, monitoring the use of, and then creating value from 3D assets. Once they have been deployed to the web – or pulled into AI applications, in some scenarios – Threedium allows brands to capture user behaviour (which product sections shoppers examine the most closely, how they spin and zoom in on a 3D object) and then to feed the data from these interactions back into both commerce teams – to further optimise engagement and conversion – and design teams, so that user intent can inform the creative decisions behind future collections. In the long term, the company also sees pipelines for the deployment of digital twins in training humanoid robots, both in the form and feel of 3D products and in the shape of human sentiment around those products.
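To make the feedback loop described above more concrete, here is a minimal sketch of how viewer interactions could be rolled up into a per-part ranking. It is an illustration only: the `InteractionEvent` fields, the `dwell_by_part` helper, and the sample data are all hypothetical, and nothing here reflects Threedium's actual data model or analytics pipeline.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class InteractionEvent:
    """One hypothetical viewer event: a shopper examining a product part."""
    product_id: str
    part: str       # e.g. "tongue", "sole"
    action: str     # e.g. "rotate", "zoom"
    seconds: float  # time spent on this part during the action


def dwell_by_part(events):
    """Sum the seconds spent on each part, most-examined part first."""
    totals = defaultdict(float)
    for event in events:
        totals[event.part] += event.seconds
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Invented sample data, purely for illustration.
events = [
    InteractionEvent("sneaker-01", "sole", "zoom", 4.0),
    InteractionEvent("sneaker-01", "tongue", "rotate", 2.5),
    InteractionEvent("sneaker-01", "sole", "rotate", 3.0),
]

ranking = dwell_by_part(events)
print(ranking)
```

A ranking like this is the kind of signal that could, in principle, be shared with both commerce and design teams; what a production system would actually capture, and how, is not specified in the source.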

But achieving these kinds of applications at the cost, speed, and human resource benchmarks the company has set, also relies on some major breakthroughs behind the scenes.

Chief among these is another key blend of 3D and AI, which deploys AI to unlock auto-segmentation of 3D models, allowing fixed assets to be separated into parts without the direct intervention of 3D creators, and without those assets having been designed as modular to begin with. Once this process is complete, non-technical users can then use an easy brush tool to choose specific product parts for configuration, exploration, and customisation – for both end consumers on the web, and for in-house teams.

Unarguably the most impressive application in the new suite, though, brings all of these elements together: working in partnership with a major brand, and with the team behind Amazon Web Services, Threedium have built a native AI configurator that allows internal and external audiences to interact with an LLM through a natural language voice interface, and to issue non-technical commands to perform what would otherwise be extremely specialist work.

To fully appreciate the scope of this audio mode, it helps to interact with it first-hand, as The Interline had the opportunity to do ahead of the launch, but it has the potential to feel like the same kind of “magical” tipping point that ChatGPT did a few years ago.

For internal teams looking to prototype by iterating on an existing silhouette or platform (in the case of footwear), it’s hard to overstate just how much simpler a process it could be to allow AI to generate a model of a product, to segment it, and to then give simple voice commands to a model that then puts them into practice. “Change the tongue of this shoe to blue” is a simple example, and one that the audio mode can execute easily, but it’s also an interaction that relies on and simultaneously hides a lot of different, complex pieces under the hood.
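As a rough illustration of the last step in that chain, the sketch below turns the example command into an edit on an already-segmented model. Everything in it is an assumption made for the sake of the example: the dictionary standing in for a segmented shoe, the `apply_command` helper, and above all the regular expression, which is a deliberately crude stand-in for the speech recognition and LLM interpretation that the real system would rely on.

```python
import re

# Hypothetical segmented model: part name -> current colour.
shoe = {"tongue": "white", "sole": "black", "laces": "white"}

# Crude stand-in for LLM intent parsing; matches one command shape only.
COMMAND = re.compile(
    r"change the (?P<part>\w+) of this \w+ to (?P<colour>\w+)",
    re.IGNORECASE,
)


def apply_command(model, text):
    """Return a copy of the model with the requested part recoloured."""
    match = COMMAND.search(text)
    if match is None:
        raise ValueError(f"could not interpret command: {text!r}")
    part = match["part"].lower()
    if part not in model:
        # The command can only target parts that segmentation produced.
        raise KeyError(f"model has no segmented part named {part!r}")
    updated = dict(model)  # leave the original model untouched
    updated[part] = match["colour"].lower()
    return updated


updated = apply_command(shoe, "Change the tongue of this shoe to blue")
print(updated["tongue"])
```

The point of the sketch is the dependency it exposes: the command only works because the model has already been split into named parts, which is why auto-segmentation sits upstream of the voice interface.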

And as we come full circle and consider the challenge of creating differentiated content experiences in the age of the agentic web, it’s easy to see how impactful a consumer-facing configurator or product discovery engine built on these foundations could be.

For context, consider how significantly the world of knowledge work has changed with the integration of large language models into existing platforms and workflows in a way that allows users to “talk to their documents,” and how the work of creating and manipulating images (or coding applications) has shifted from specialist roles and solutions to non-specialist users interacting with chatbots.

It does not take a huge leap to consider a similar transition away from the creation of 3D being a heavily centralised, specialised, and scarce resource that has fallen behind the pace of demand – with AI streamlining the creation of 3D models, allowing them to be separated into their constituent parts and manipulated using traditional tools or natural language, then allowing them to be deployed and monitored, or even making them accessible to AI applications through a Model Context Protocol server, so that 3D content can be found and surfaced as well as being purposefully embedded.
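For readers unfamiliar with how an AI application would ask a Model Context Protocol server for content, the sketch below builds the kind of message involved. MCP messages follow JSON-RPC 2.0, and a tool is invoked with the `tools/call` method; the tool name `find_3d_asset` and its arguments are hypothetical placeholders, not a description of any tool Threedium actually exposes.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request that asks an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and arguments, for illustration only.
request = build_tool_call(
    1,
    "find_3d_asset",
    {"query": "blue running shoe", "format": "glb"},
)

payload = json.dumps(request)
print(payload)
```

In practice, an AI application would first discover which tools a server offers and then send requests of this shape over the connection it has established; the server's response would carry whatever content the tool returns.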

All of the different pieces that Threedium are launching are driven by the singular realisation that informed this article: that the 3D creation, distribution, and monetisation bottleneck evidently needs widening, and that AI and 3D are now seemingly ready to combine in a foundational way.

For fashion, and other product-centric industries with a burning need to stand out in a sea of undifferentiated content, the demand is clear: 3D that’s available to everyone, that can show up everywhere it’s needed, that’s cost-effective to produce, and that can power genuinely transformative modes of manipulating and interacting with unique content in a way that’s previously been reserved for technical professionals.

To explore Threedium’s vision for a new platform approach to 3D and AI, where large language models can autonomously build, manage, and monetise digital twins, visit their website.

For more on the evolution of 3D and digital product creation workflows, including the areas where it crosses over with AI, download The Interline’s DPC Report 2026 later this week.